Welcome to the Mobile Manipulation Tutorial (MoManTu).

This is the webpage for the tutorial, which will appear in (journal to be announced). Find the overview paper here (link to the open-access paper provided once it is accepted) and the detailed tutorial here (ditto). The code is hosted on https://github.com/momantu.

The tutorial is still under development! Please use with caution.

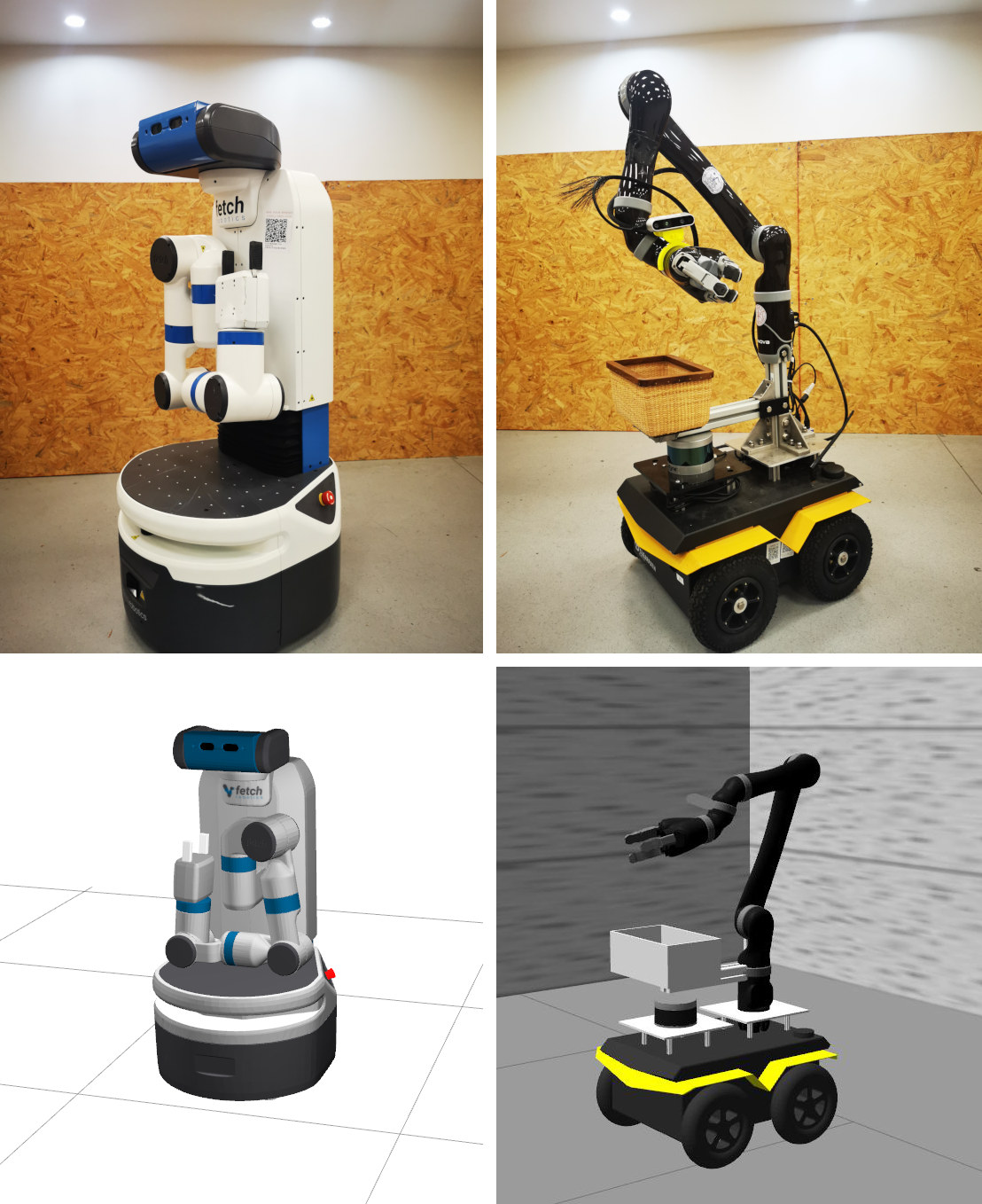

The tutorial teaches how to program a mobile robot equipped with a robot arm to perform mobile manipulation. The example systems are a Fetch robot and a Clearpath Jackal with a Kinova Jaco arm attached (see Figure 1).

Figure 1: The robots used in the MoManTu: Fetch and Jackal + Kinova (top left and right) and their simulated versions below.

The tutorial is developed by the Mobile Autonomous Robotic Systems Lab (MARS Lab) and the Living Machines Lab (LIMA Lab) of the ShanghaiTech Automation and Robotics Center (STAR Center), School of Information Science and Technology (SIST) of ShanghaiTech University.

We welcome comments, suggestions and potentially even code contributions to the tutorial. Please see Getting Help to contact us.

The two videos below show the demo on a real and a simulated Fetch robot:

Docker

The easiest way to get started with the tutorial is to use the provided Docker image.

To pull the Docker image and run the demo, use:

docker run --name momantu -i -t -p 6900:5900 -e RESOLUTION=1920x1080 yz16/momantu

Then open a VNC client (e.g. RealVNC) and connect to 127.0.0.1:6900. The workspaces are located in the root folder and ready to use.
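In the command above, `-p 6900:5900` publishes the container's port 5900 on host port 6900, which is why the VNC client connects to 127.0.0.1:6900, and `-e RESOLUTION=...` sets the desktop resolution. If port 6900 is already in use on your machine, or you want a smaller desktop, both values can be changed. The following is a minimal sketch: `momantu_docker_cmd` is a hypothetical helper (not part of the MoManTu repositories) that only prints the command so you can inspect it before running it, and it assumes the image accepts resolutions other than 1920x1080.

```shell
# momantu_docker_cmd is a hypothetical helper, not part of the MoManTu repositories.
# It prints (does not run) the docker command for a chosen host port and resolution.
momantu_docker_cmd() {
  # $1: host port for the VNC client (default 6900, as in the command above)
  # $2: desktop resolution passed via RESOLUTION (default 1920x1080)
  printf 'docker run --name momantu -i -t -p %s:5900 -e RESOLUTION=%s yz16/momantu\n' \
    "${1:-6900}" "${2:-1920x1080}"
}

# Example: use host port 6901 and a smaller desktop.
momantu_docker_cmd 6901 1280x720
```

With the example values, the VNC client would connect to 127.0.0.1:6901 instead. Note that because the container is named `momantu`, a second `docker run` with the same name fails while that container still exists; `docker start -a -i momantu` restarts and attaches to the existing container.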

Run MoManTu

Launch the simulator and the MoManTu software by running this command in the terminal:

roslaunch fetch_sim demo.launch

The pose estimation module is based on NOCS and runs in a conda environment with Python 3.5. Activate the environment and launch the object pose estimation module with:

conda activate NOCS #activate environment

source ~/nocs_ws/devel/setup.bash

roslaunch nocs_srv pose_estimation_server.launch

Finally, launch the flexbe_app and the robot_server node to connect the other modules and start the demo:

roslaunch fetch_sim robot_service.launch
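Each `roslaunch` call above keeps running in the foreground, so the three steps are typically started in separate terminals. Before launching, it can help to confirm that the required commands are available in the current shell. The sketch below is only an illustration: `check_tools` is a hypothetical helper, not part of the MoManTu repositories, and it checks nothing beyond whether each command can be found.

```shell
# check_tools is a hypothetical helper, not part of the MoManTu repositories.
# It reports every listed command that cannot be found in the current shell
# and returns non-zero if at least one is missing.
check_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# The launch steps above rely on roslaunch and conda.
check_tools roslaunch conda || echo "set up the ROS and conda environments before launching"
```

If a tool is reported as missing inside the Docker container, the corresponding workspace or environment has probably not been sourced in that terminal yet.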

Sources

The sources for the MoManTu are on GitHub: https://github.com/momantu.

The MoManTu Fetch demo is found here: https://github.com/momantu/momantu_fetch

The MoManTu Jackal Kinova demo is not yet available (todo).

Online Packages:

| Role | Package | URL |
|---|---|---|
| Localization | AMCL | link |
| Local Costmap | ROS Navigation | link |
| Path Planning | ROS Navigation | link |
| Path Following | ROS Navigation | link |
| Arm Control 1 | kinova_ros | link |
| Arm Control 2 | fetch_ros | link |
| Category Detection & Pose | NOCS | link |
| Object Detection | Pose | (todo) |
| Object Place Pose | AprilTag_ROS | link |
| Grasp Planning | / | / |
| Arm Planning 1 & IK | MoveIt Pick and Place | link |
| Arm Planning 2 & IK | MoveIt directly (todo) | link |
| Human Robot Interaction 1 | RViz & FlexBE App | link |
| Human Robot Interaction 2 | Speech (todo) | (todo) |
| Decision Making | FlexBE | link |

Offline Packages:

| Role | Package | URL |
|---|---|---|
| Simulation World Building | Gazebo | link |
| SLAM 2D | Cartographer | link |
| SLAM 3D | Cartographer (todo) | link |
| Camera Calibration | camera_calibration | link |
| Camera Pose Detection | AprilTag_ROS | link |
| Hand-eye Calibration | easy_handeye | link |

Getting Help

You are welcome to post issues on the momantu_fetch repository to get help. You may also send an email to Hou Jiawei or Prof. Sören Schwertfeger.

Sensor Log

A ROS bagfile with the sensor data from a run on a real Fetch robot is available (3.4 GB).

Citing

Please cite our paper if you use the tutorial in your research.

(bibtex citation to be provided)